Parallel Geturl

Keith Vetter 2004-03-06 : Here's the problem: I needed to download about several thousand web pages in a way that wouldn't take all night and day. (FYI these pages are maps from Terraserver--see also [L1 ] and TkTopoMap). Each page only takes a few seconds but the vast number of pages needed makes the total time too large, plus I discovered that about 1% of the requests time out.

The obvious solution is to somehow launch multiple requests in parallel, but straight tcl doesn't support classic parallelism. Before when I faced this problem I used a complicated scheme involving the after command (see TkMapper) but later realized that the callback option to ::http::geturl provided a better solution.

This time I decided to write a package to solve the problem in a simple but efficient manner. The public API is just Add, Launch, Reset, Config and Status. But in the simplest manner you just need Add to provide the url and callback routine for every page you want to download, and the package will do the rest: launch off a set of simultaneous requests, manage timeout errors, launch new requests when others complete and insure the user's callback routine gets called when the request completes.

I've included a rather sexy demo (which takes up more lines of code) that lets you see the package in action and tweak different parameters. Two notes: First, I was able to go from 5 second per page to about 7 pages per second. Second, if I set the timeout too low or parallelism too high I crash tcl with a bad memory read error (Windows 2K 8.4.4).

SSS 2010-2-24 Note that the sexy demo works fine for a bit. Then (I believe) Micro$oft notices you hammering their server and throttles you (fair enough). Then requests start to time out, and about the time you get through the first 30 timeouts, the stack fills up - out of stack space (infinite loop?). I haven't chased the stack overflow down, so it could be demo code, or due to the package. (Windows Tcl/Tk 8.5.2) Not a complaint, just a note - a very sexy demo, and cool code. Thanks.

KPV 2010-2-24 : Interesting, I've used it to download high hundreds of files and didn't get any errors. That was on Windows Server 2003 and on XP. I'll try it on Windows 7 and see what happens.

##+##########################################################################

#

# Parallel Geturl -- package (and demo) that efficiently downloads large

# numbers of web pages while also handling timeout failures. Web requests

# are queued up and a set number are simultaneously fired off. As requests

# complete, new ones of popped off the queue and launched.

# by Keith Vetter, March 5, 2004

package require Tk

package require http

namespace eval PGU {

variable options ;# User tweakable values

variable queue ;# Request queue

variable qhead 1 ;# First empty slot

variable qtail 0 ;# Last in use slot

variable stats ;# Array of statistics

variable wait 0 ;# For vwait

array set options {-degree 50 -timeout 30000 -maxRetries 5}

proc ::PGU::Reset {} {

variable queue

variable stats

variable qhead 1

variable qtail 0

variable wait 0

catch {unset queue}

array set queue {}

array set stats {qlen 0 pending 0 done 0 timeouts 0}

}

::PGU::Reset

}

##+##########################################################################

#

# ::PGU::Config -- allow user to configure some parameters

#

proc ::PGU::Config {args} {

variable options

set o [lsort [array names options]]

if {[llength $args] == 0} { ;# Return all results

set result {}

foreach name $o {

lappend result $name $options($name)

}

return $result

}

foreach {flag value} $args { ;# Get one or set some

if {[lsearch $o $flag] == -1} {

return -code error "Unknown option $flag, must be: [join $o ", "]"

}

if {[llength $args] == 1} { ;# Get one config value

return $options($flag)

}

set options($flag) $value ;# Set the config value

}

}

##+##########################################################################

#

# ::PGU::Add -- adds a url and callback command to are request queue

#

proc ::PGU::Add {url cmd {nolaunch 0}} {

variable queue ; variable qtail ; variable stats

set queue([incr qtail]) [list $url $cmd 0]

incr stats(qlen)

DEMO:ShowStatus $qtail queued ;# REMOVE if not demo

if {$nolaunch} return

::PGU::Launch

}

##+##########################################################################

#

# ::PGU::Launch -- launches web requests if we have the capacity

#

proc ::PGU::Launch {} {

variable queue

variable qtail

variable qhead

variable options

variable stats

while {1} {

if {$qtail < $qhead} return ;# Empty queue

if {$stats(pending) >= $options(-degree)} return ;# No slots open

set id $qhead

incr qhead

incr stats(pending)

incr stats(qlen) -1

DEMO:ShowStatus $id pending ;# REMOVE if not demo

set url [lindex $queue($id) 0]

::http::geturl $url -timeout $options(-timeout) \

-command [list ::PGU::_HTTPCommand $id]

}

}

##+##########################################################################

#

# ::PGU::_HTTPCommand -- our geturl callback command that handles

# queue maintenance, timeout retries and user callbacks.

#

proc ::PGU::_HTTPCommand {id token} {

variable queue

variable stats

variable options

variable wait

foreach {url cmd cnt} $queue($id) break

set status [::http::status $token]

if {$status == "timeout"} {

incr stats(timeouts)

incr cnt -1

if {abs($cnt) < $options(-maxRetries)} {

::http::cleanup $token

DEMO:ShowStatus $id timeout ;# REMOVE if not demo

lset queue($id) 2 $cnt ;# Remember retry attempts

::http::geturl $url -timeout $options(-timeout) \

-command [list ::PGU::_HTTPCommand $id]

return

}

DEMO:ShowStatus $id failure ;# REMOVE if not demo

} else {

DEMO:ShowStatus $id done ;# REMOVE if not demo

}

incr stats(pending) -1 ;# One less outstanding request

incr stats(done)

::PGU::Launch ;# Try launching another request

set n [catch {eval $cmd $token} emsg]

if {$n} {puts stderr "ERRORX: $emsg\n"

set ::CMD "$cmd $token"

}

::http::cleanup $token

if {$stats(qlen) == 0 && $stats(pending) == 0} { ;# If done trigger vwait

set wait 1

}

}

##+##########################################################################

#

# ::PGU::Wait -- blocks until all geturl request queue is empty

#

proc ::PGU::Wait {} {

vwait ::PGU::wait

}

##+##########################################################################

#

# ::PGU::Status -- returns some statistics of the current state

#

proc ::PGU::Status {} {

variable stats

return [list $stats(qlen) $stats(pending) $stats(done) $stats(timeouts)]

}

################################################################

################################################################

################################################################

#

# DEMO CODE

#

#

array set colors "queued blue pending yellow done green

timeout orange failure red unused [. cget -bg]"

# Called by PGU code to update squares w/ appropriate status color

proc DEMO:ShowStatus {id status} {

.f.l$id config -bg $::colors($status)

}

# Our callback to the ::http::geturl command

proc HTTPCommand {id token} {

global status

Tick ;# Update statistics

return

# Code to save off the web page data

set fname "maps/${id}_[expr {int(rand() * 1000)}].jpg"

set fout [open $fname "w"]

fconfigure $fout -translation binary

puts -nonewline $fout [::http::data $token]

close $fout

}

# Puts up our (more and more complex) demo GUI

proc DoDisplay {} {

wm title . "Parallel Geturl"

label .j; .j configure -font "[font actual [.j cget -font]] -weight bold"

catch {font delete myBold} ; eval font create myBold [.j cget -font]

frame .f -bd 2 -relief raised

frame .ctrl -bd 2 -relief ridge

frame .key -bd 2 -relief ridge

grid .f .ctrl -row 0 -sticky news

# Draw all the cells

set ID 0

for {set row 0} {$row < 25} {incr row} {

for {set col 0} {$col < 15} {incr col} {

set w .f.l[incr ID]

label $w -width 4 -bd 2 -relief sunken -text $ID -fg gray50

grid $w -row $row -column $col

}

}

# Key section

set cnt 3

label .key.key -text KEY -font myBold -bd 2 -relief raised

grid .key.key - - -row 0 -sticky ew -pady {0 5}

foreach state {unused queued pending done timeout failure} {

label .key.$state -bd 2 -relief ridge -bg $::colors($state) \

-font myBold -text [string totitle $state]

grid .key.$state -row [expr {$cnt / 3}] -column [expr {$cnt % 3}] \

-padx 10 -sticky ew

incr cnt

}

.key.queued config -fg white

grid rowconfigure .key 100 -minsize 5

grid columnconfigure .key 1 -weight 1

# Stats section

frame .stats -bd 2 -relief ridge

label .stats.stats -text STATS -font myBold -bd 2 -relief raised

grid .stats.stats - -row 0 -sticky ew

grid columnconfigure .stats 1 -weight 1

foreach w {start duration qlen pending done timeouts} {

set title [string totitle $w]

label .$w -text "$title:" -anchor e -font myBold

label ._$w -textvariable status($w) -anchor w -font myBold -width 9

grid .$w ._$w -in .stats -sticky ew

}

.qlen config -text "Queue"

# Configuration section

frame .config -bd 2 -relief ridge

label .config.config -text CONFIGURATION -font myBold -bd 2 -relief raised

grid .config.config - -row 0 -sticky ew

grid columnconfigure .config 1 -weight 1

label .config.cnt -text "Test Count:" -font myBold -anchor e

scale .config.scnt -orient h -from 1 -to $ID -font myBold -relief ridge \

-variable status(cnt) -command Squares

label .config.degree -text "Parallelism:" -font myBold -anchor e

scale .config.sdegree -orient h -from 1 -to 200 -font myBold \

-relief ridge -variable ::PGU::options(-degree)

label .config.timeout -text Timeout: -font myBold -anchor e

scale .config.stime -orient h -from 1000 -to 60000 -font myBold \

-relief ridge -variable ::PGU::options(-timeout) -resolution 1000

grid .config.cnt .config.scnt -sticky ew

grid .config.degree .config.sdegree -sticky ew

grid .config.timeout .config.stime -sticky ew

label .finish -fg red -textvariable status(finish) \

-font "[font actual myBold] -size 18"

frame .frun -bd 2 -relief sunken -padx 10 -pady 10

button .run -text "Run Demo" -font myBold -command RunDemo

grid .key -in .ctrl -sticky new

grid .stats -in .ctrl -sticky new -pady 5

grid .config -in .ctrl -sticky sew

grid rowconfigure .ctrl 50 -weight 1

grid .finish -in .ctrl -row 60

grid .frun -in .ctrl -pady 10

grid .run -in .frun

button .about -text "?" -font myBold -command About

place .about -in .ctrl -relx 1.0 -rely 1.0 -anchor se

bind all <Key-F2> {console show}

}

proc RunDemo {{n {}}} {

global status

if {$n == {}} {set n $status(cnt)}

set status(milli) [clock clicks -milliseconds]

set status(start) [clock format [clock seconds] -format %T]

foreach w {duration qlen pending done timeouts} {set status($w) 0}

set status(finish) ""

Busy 1

# Start the downloads

::PGU::Reset

Tick

for {set i 0} {$i < $n} {incr i} {

set url [GenerateURL $i]

::PGU::Add $url [list HTTPCommand $i] 1

}

::PGU::Launch

::PGU::Wait

set status(finish) "DONE"

Busy 0

}

proc Tick {} {

global status

after cancel $status(aid,tick)

if {$status(finish) != ""} return

set milli [expr {[clock clicks -milliseconds] - $status(milli)}]

set status(duration) [expr {round($milli / 100) / 10.0}]

foreach {status(qlen) status(pending) status(done) status(timeouts)} \

[::PGU::Status] break

set status(aid,tick) [after 1000 Tick]

}

proc Busy {onoff} {

set state [expr {$onoff ? "disabled" : "normal"}]

set fg [expr {$onoff ? "gray50" : "black"}]

foreach w [concat [winfo child .config] .run] {

if {$w == ".config.config"} continue

$w config -state $state -fg $fg

}

}

proc Squares {n} {

for {set i 1} {[winfo exists .f.l$i]} {incr i} {

.f.l$i config -bg $::colors(unused) \

-fg [expr {$i > $n ? "gray50" : "black"}]

}

}

proc About {} {

set msg "Parallel Geturl\nby Keith Vetter, March 5, 2004\n\n"

append msg "This program demonstrates an efficient way to\n"

append msg "download a large number of web pages while also\n"

append msg "handling timeout failures. Web requests are queued\n"

append msg "up and a set number of them are simultaneously\n"

append msg "launched. As request complete, new ones are\n"

append msg "popped off the queue and fired."

tk_messageBox -message $msg -title "About Parallel Geturl"

}

# Creates a url to fetch a semi random page from the Terraserver

proc GenerateURL {id} {

set y [expr {5000 + int(rand() * 1000)}] ;# Avoid caching affects

set x [expr {400 + $id}]

set url "http://terraserver.microsoft.com/tile.ashx?T=2&S=12&W=0&Z=17"

append url "&Y=$y&X=$x"

return $url

}

set status(aid,tick) 0

set status(cnt) 100

DoDisplayThe TIL contains a rather similar package called massgeturl. The package is a bit more advanced. For example, it handles redirects and can control the number of outbound connections for sites. To do this it has a simplistic queuing system and URLs to be fetched have priorities to control which one will be fetched next when being popped out of the queue. EF

uniquename 2013aug19

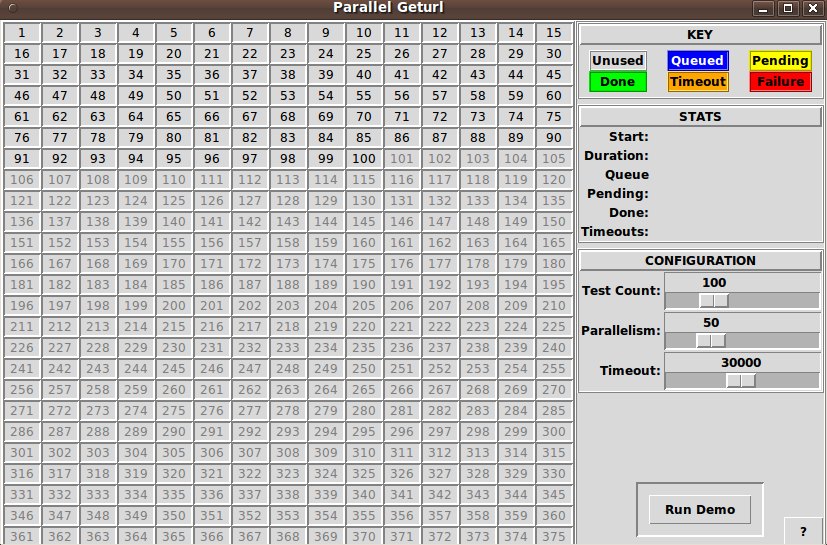

For the readers who do not have the time/facilities/whatever to setup the code above and then execute it, here is an image of the GUI that this code produces.

I made two changes to the code above to be able to display the GUI:

- since I do not have the 'http' package installed, I commented out the check for that package

- I added the following statement to the top of the code (to run on a Linux distro)

#!/usr/bin/wish