VNNlab

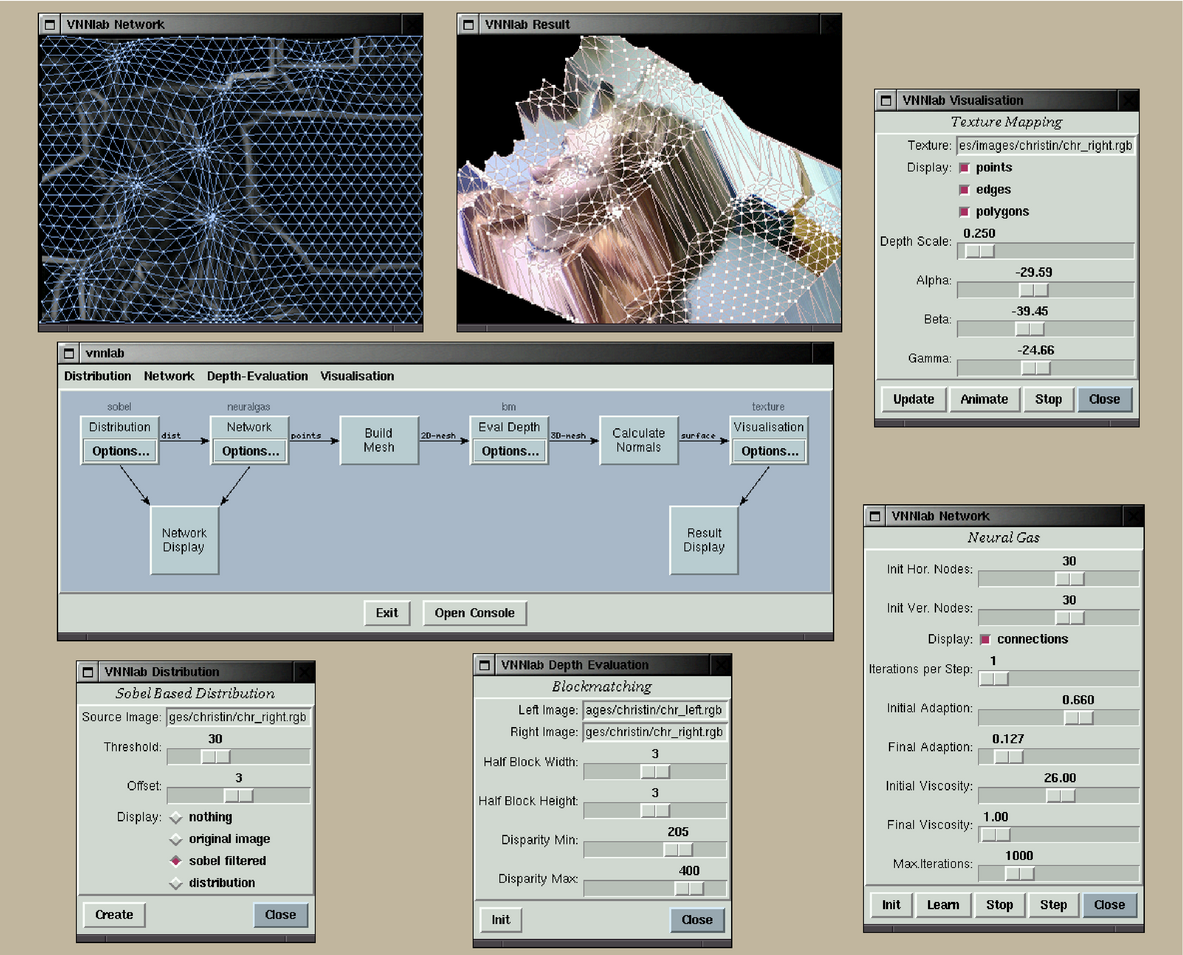

VNNlab is an experimentation platform for applying neural network techniques to stereo analysis, with the goal of creating a 3D polygon model from a pair of stereo images.

The depth of a point in one image is estimated from the horizontal displacement (disparity) of its corresponding point in the other image. The biggest problem in automating this estimation is finding points that actually correspond in both images. Many pixels in natural images are useless for the estimation, for example pixels of planar surfaces, which offer little structure to match.

VNNlab therefore determines the interesting pixels by filtering one source image with an edge-detection filter (Sobel) and applying a threshold. A vector-based neural network then learns these interesting points. Different algorithms can be used for the learning process, for example k-means, learning vector quantization, neural gas, and simulated annealing. The network vertices, which represent the interesting points of the source image, are connected into a contiguous mesh using Delaunay triangulation.

To find correspondences, standard intensity-based methods (block matching, Shirai, cross-correlation) and color-based methods are implemented. For natural images, however, the best results were produced by a custom algorithm that uses local texture parameters: the fast Fourier transform (FFT) coefficients of small blocks. Finally, VNNlab visualizes the result via OpenGL as a 2D mesh, a 3D mesh, or a 3D textured mesh.
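The selection of interesting pixels (Sobel filter plus threshold) can be sketched as follows. This is a minimal illustration, not VNNlab's code: it assumes a grayscale image stored as a list of rows, leaves border pixels at zero, and the function names and threshold value are hypothetical.

```python
import math

def sobel_magnitude(img):
    """Sobel gradient magnitude of a grayscale image (list of rows).

    Border pixels are left at 0.
    """
    h, w = len(img), len(img[0])
    gx_k = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient kernel
    gy_k = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient kernel
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += gx_k[dy][dx] * p
                    gy += gy_k[dy][dx] * p
            mag[y][x] = math.hypot(gx, gy)
    return mag

def interesting_points(img, threshold):
    """(x, y) coordinates whose edge strength exceeds the threshold."""
    mag = sobel_magnitude(img)
    return [(x, y) for y, row in enumerate(mag)
            for x, v in enumerate(row) if v > threshold]

# A vertical step edge between columns 1 and 2.
img = [[0, 0, 10, 10, 10] for _ in range(5)]
print(interesting_points(img, 20))
```

Only pixels next to the edge survive the threshold; pixels in the flat regions on either side are discarded, which is exactly the effect described above for planar surfaces.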
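Of the learning algorithms listed, k-means is the simplest to show. The sketch below is plain batch k-means (Lloyd's algorithm) that reduces a set of interesting points to `k` prototype vectors, which play the role of the network vertices. The function name, iteration limit and seed are assumptions for illustration; VNNlab's own implementation may differ (for example by updating prototypes online).

```python
import random

def kmeans(points, k, iterations=20, seed=0):
    """Batch k-means on 2D points; returns k prototype vectors."""
    rng = random.Random(seed)
    centers = [tuple(map(float, p)) for p in rng.sample(points, k)]
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest center.
        clusters = [[] for _ in range(k)]
        for (x, y) in points:
            i = min(range(k),
                    key=lambda c: (x - centers[c][0]) ** 2 + (y - centers[c][1]) ** 2)
            clusters[i].append((x, y))
        # Update step: move each center to the mean of its cluster.
        new_centers = []
        for c, members in zip(centers, clusters):
            if members:
                new_centers.append((sum(p[0] for p in members) / len(members),
                                    sum(p[1] for p in members) / len(members)))
            else:
                new_centers.append(c)  # an empty cluster keeps its center
        if new_centers == centers:
            break  # assignments no longer change: converged
        centers = new_centers
    return centers

points = [(0, 0), (1, 0), (0, 1), (10, 10), (11, 10), (10, 11)]
print(sorted(kmeans(points, 2)))
```

With two well-separated groups, the two prototypes settle at the group means, so each vertex summarizes one cluster of interesting points.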
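Intensity-based block matching and the disparity-to-depth step can be illustrated together. This sketch assumes rectified images, so that a corresponding point lies on the same row, uses the sum of absolute differences (SAD) as the matching cost, and converts disparity to depth with the standard pinhole relation Z = f·B/d. The focal length `focal_px`, baseline `baseline` and all function names are hypothetical parameters, not taken from VNNlab.

```python
import math

def block_cost(left, right, x, y, d, r):
    """SAD between the block around (x, y) in the left image and the
    block around (x - d, y) in the right image."""
    return sum(abs(left[y + j][x + i] - right[y + j][x - d + i])
               for j in range(-r, r + 1) for i in range(-r, r + 1))

def match_disparity(left, right, x, y, max_disp, r=1):
    """Disparity in [0, max_disp] with the lowest SAD cost.

    The caller must keep the block inside both images (x >= r, y +/- r valid).
    """
    candidates = [d for d in range(max_disp + 1) if x - d - r >= 0]
    return min(candidates, key=lambda d: block_cost(left, right, x, y, d, r))

def depth_from_disparity(d, focal_px, baseline):
    """Pinhole stereo triangulation: Z = f * B / d, for d > 0."""
    return focal_px * baseline / d

row = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8]
left = [row[:] for _ in range(3)]
right = [row[2:] + [0, 0] for _ in range(3)]  # scene shifted 2 px to the left
d = match_disparity(left, right, 6, 1, 4)
print(d, depth_from_disparity(d, 500, 0.1))
```

Larger disparities mean closer points; a disparity of zero would correspond to a point at infinity, which is why the depth formula requires d > 0.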
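The custom texture-based algorithm is not described in detail here, so the following is only an illustrative sketch of the general idea, not VNNlab's method. It assumes that the texture parameters of a block are the magnitudes of its 2D Fourier coefficients and that blocks are compared by Euclidean distance. For brevity it computes a naive discrete Fourier transform, which yields the same coefficients as an FFT, only more slowly. Block size `n` and all function names are hypothetical.

```python
import cmath
import math

def dft2_magnitudes(block):
    """Magnitudes of the 2D DFT of a small square block (naive O(n^4))."""
    n = len(block)
    mags = []
    for u in range(n):
        for v in range(n):
            s = sum(block[y][x] * cmath.exp(-2j * math.pi * (u * y + v * x) / n)
                    for y in range(n) for x in range(n))
            mags.append(abs(s))
    return mags

def extract_block(img, x, y, n):
    """n x n block with its top-left corner at (x, y)."""
    return [row[x:x + n] for row in img[y:y + n]]

def texture_disparity(left, right, x, y, max_disp, n=4):
    """Disparity whose right-image block has the Fourier-magnitude
    descriptor closest to that of the left-image block at (x, y)."""
    ref = dft2_magnitudes(extract_block(left, x, y, n))

    def distance(d):
        cand = dft2_magnitudes(extract_block(right, x - d, y, n))
        return math.dist(ref, cand)

    return min((d for d in range(max_disp + 1) if x - d >= 0), key=distance)

left = [[x * x + y for x in range(10)] for y in range(4)]
right = [row[2:] + [0, 0] for row in left]  # scene shifted 2 px to the left
print(texture_disparity(left, right, 4, 0, 3))
```

Comparing spectra rather than raw intensities makes the descriptor depend on the local texture pattern instead of the exact pixel positions inside the block, which is one plausible reason such a method could behave better on natural images.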

VNNlab features a plug-in architecture: algorithms can be exchanged at runtime, and their parameters can be modified live while an algorithm is running.
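One way such a plug-in architecture could look is sketched below. Everything here (`Plugin`, `Registry`, `Slot`, and the two toy plug-ins) is a hypothetical illustration, not VNNlab's actual interface. Plug-ins register under a name, a slot holds the currently active plug-in and can swap it between steps, and parameters live in a dict that is read on every step, so changes take effect immediately.

```python
class Plugin:
    """Base class for exchangeable algorithms (hypothetical interface)."""
    name = "base"

    def __init__(self, **params):
        self.params = dict(params)  # read on every step, so editable live

    def step(self, data):
        raise NotImplementedError

class Registry:
    """Maps plug-in names to classes so algorithms can be chosen at runtime."""
    def __init__(self):
        self._plugins = {}

    def register(self, cls):
        self._plugins[cls.name] = cls
        return cls  # allows use as a class decorator

    def create(self, name, **params):
        return self._plugins[name](**params)

class Slot:
    """Holds the active plug-in; a swap takes effect on the next step."""
    def __init__(self, plugin):
        self.plugin = plugin

    def swap(self, plugin):
        self.plugin = plugin

    def step(self, data):
        return self.plugin.step(data)

registry = Registry()

@registry.register
class Threshold(Plugin):
    name = "threshold"
    def step(self, values):
        return [v for v in values if v > self.params["t"]]

@registry.register
class TopK(Plugin):
    name = "top_k"
    def step(self, values):
        return sorted(values, reverse=True)[: self.params["k"]]

slot = Slot(registry.create("threshold", t=5))
print(slot.step([1, 7, 3, 9]))   # active: threshold with t=5
slot.plugin.params["t"] = 8      # parameter changed live
print(slot.step([1, 7, 3, 9]))
slot.swap(registry.create("top_k", k=2))  # algorithm exchanged at runtime
print(slot.step([1, 7, 3, 9]))
```

Reading parameters from the dict on each step, instead of copying them at construction time, is what lets an interactive front end adjust an algorithm while it keeps running.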