SQLite

SQLite is a free software library that implements a self-contained, serverless, zero-configuration, transactional SQL database engine. It is the most widely deployed SQL database engine in the world. The source code for SQLite is in the public domain. SQLite started out as a Tcl extension, and is now a built-in to the standard Tcl distribution.

Attributes

- current version

- 3.45.0

- release time

- 2024-01-15

- contact

- D. Richard Hipp

Resources

Documentation

- The SQLite Database Engine , Michael Owens, 2004-03

Press

- PalmSource Releases ALP Component To Open Source Community , 2006-08-14

- Discusses libsqlfs, an add-on to SQLite created as part of the ACCESS Linux Platform (ALP).

- Size isn't everything for the modest creator of SQLite , Tim Anderson, 2007-06-20

The Untold Story of SQLite , Podcast Corecursive#66 by Adam Gordon Bell, 2021-07-02.

Collection of insane and fun facts about SQLite , 2024-12-30.

Books

The Definitive Guide to SQLite , by Mike Owens, Apress, 2006, ISBN 1-59059-673-0, 464 pages

SQLite (Developer's Library) , by Chris Newman

SQLite , by Naoki Nishizawa

Tcl Bindings

SQLite was designed from the beginning to be used with Tcl. Tcl bindings have been in the SQLite core since before version 1.0 and almost half of the SQLite source code base consists of regression test scripts written in Tcl. SQLite wants to be programmed in Tcl.

- tclsqlite

- A 100% upwards-compatible variant of SQLite, bundled as a Tcl extension. Hosted on Sourceforge as cyqlite . This is distributed as part of the Tcl Core.

Interactive Interfaces

- sqlitetablelist

- A GUI for designing, viewing and editing for SQLite3 databases.

Tools

- An SQL database backed VFS

- A tclvfs for SQLite.

- GEB, by Gerry Snyder

- A general-purpose SQLite GUI and database manipulation tool. It includes facilities for storing, examining, searching, editing, and executing text stored in the tclcode table of the main and any attached DB file.

What: sqliteodbc Where: http://www.ch-werner.de/sqliteodbc/ Description: ODBC driver for SQLite. Currently at version 0.66. Updated: 05/2006 Contact: See web site

What: nsdbilite Description: Native SQLite driver for the nsdbi database interface. Updated: 06/2008

What: javasqlite Where: http://www.ch-werner.de/javasqlite/ Description: Java JDBC wrapper for sqlite. Currently at version 20050608. Updated: 05/2006 Contact: See web site

What: wisql Where: http://www.ucolick.org/%7Ede/ http://www.ucolick.org/cgi-bin/Tcl/wisql.cgi ftp://ftp.ucolick.org/pub/UCODB/wisql5.1.tar.gz Description: UCO wisql is an "upscale" version of Tom Poindexter's wisqlite according to the WWW page above. Requires Tcl/Tk/tclX/sybtcl and tkbind. Many other Sybase related tools are found at the above site include a forms GUI interface to Sybase called fosql. Updated: 02/2000 Contact: mailto:[email protected] (De Clarke)

Acacio Cruz Compiled 3.3.13 Tcl-bindings for Mac OS X 10.4 PowerPC. Available here: http://www.acacio.com/tcl/libsqlite3.3.13-macosx10.4-PPC.tar.gz Latest build: macosx 10.5, Intel: http://www.acacio.com/tcl/tclsqlite3.6.7-macosx10.5-tcl8.6-x86.tgz

tclsqlite 3.0.8 extension library for Linux on an iPaq or Zaurus: http://www.sr-tech.com/testing/libtclsqlite3.so

There is a complete sqlite extension for Jim with a nice command based interface. See Jim Extensions for more information.

Tclkit Kitgen Build System contain sqlite 3.5.9 and tksqlite 0.5.6 as library and starpack.

rqlite : Replicating SQLite using Raft Consensus (blog post - discussion )

Users

- Firefox 3.0

- Uses SQLite for storage of browsing history, bookmarks, cookies, favicons etc.

- TWS

- Uses SQLite as its database engine.

See Also

- [L1 ]

- See also SQLite FTS3 and FTS4 Extensions documentation for SQLite.

- SQLite Optimization

- Tips for getting more performance out of SQLite.

- sqlite_master

- The table that holds the database schema in SQLite.

- SQlite3 type affinity , by aspect

- Demonstrates how type affinity affects value conversions.

- Type affinity documentation

- SQLite's own description of how types are handled. The results of the previous item should agree with this.

- Creating an Invalid Tcl_Obj via SQLite

- Casting a blob to text can result in a Tcl_Obj with an invalid string representation.

Description

An SQL database (written in C but with Tcl extension/bindings as part of the source tree and with Tcl used for most testing). Author is D. Richard Hipp. Features include:

- Atomic, Consistent, Isolated, and Durable ACID transactions

- Zero-configuration - there is no setup or administration needed

- Simple usage from Tcl

- A complete database is stored as a single cross-platform disk file

- Supports huge databases (tebibytes) and huge strings and blobs (gibibytes)

- Small footprint ("lean", not "crippled")

Supports a large subset of SQL92, and comes with bindings for Tcl/Tk. The Tcl binding is stubs-enabled so it can be wrapped in a starkit if need be.

SQLite is not intended to be a replacement for client/server enterprise database engines like Oracle, MySQL, or PostgreSQL. SQLite is intended to replace the use of open to write and create flat files in proprietary formats used to store miscellaneous information.

SQLite is especially useful in Tcl/Tk programs as an application file format. When the user selects the File->Open menu option, the application can connect to an SQLite database instead of reading and parsing a file. This makes the file format cross-platform, gives the application ACID I/O semantics and rollback capabilities, and provides a powerful query mechanism for accessing and updating application data.

The C-code portion of SQLite is used extensively in commercial products and is likely the single most widely deployed SQL database engine. Despite the fact that most people use only the C-code portions of SQLite, the author has stated that he considers SQLite to be a Tcl extension, not a C library that happens to have Tcl bindings. The author points out the well over half of SQLite (specifically all of the test scripts) are written in Tcl and that SQLite would never have been possible without Tcl.

Version 3.0 provides a lot of important new and improved functionality, including user-defined collating, limited table-level locking, 64-bit rowids, "manifest typing", proper UTF-{8,16} support, new concurrency possibilities, and SQLite-side dereferencing of Tcl variables (see below).

Differences from Other Databases

SQLite has omitted some features that a typical database implements. (AMG: Though, this list has been gradually getting shorter.)

By default, SQLite doesn't constrain referential integrity. It parses foreign keys and builds an internal data structure to describe them, but normally stops there. An equivalent constraint enforcement can be achieved using triggers. CHECK constraints are supported as of version 3.3.0 (the pragma 'PRAGMA foreign_key_list(table-name)' provides introspection on the foreign keys). With SQLite 3.6.19 and up, FOREIGN KEY constraint enforcement can be enabled via a PRAGMA .

SQLite uses manifest typing, which means that a type is associated with each value, rather than with the column. SQLite allows to store any value of any datatype into any column regardless of the declared type of that column. A column may have a type affinity, which SQLite may use to decide whether to coerce a value to a different type for a particular operation. The exception is that each value in an INTEGER PRIMARY KEY column is an integer.

Configuration under Windows and MacOS X

For Windows, the FAQ advises for the former: "Create a subdirectory named tclsqlite into the lib directory of your Tcl installation (usually C:\tcl\lib) and put there the tclsqlite.dll library. Then, put the line:

load tclsqlite

at the beginning of your code, and you can then use the sqlite command in your tcl script."

Another way: Get a copy of tclsqlite3.dll, save it to e.g. \winnt\system32\ and then, in Tcl, do:

load tclsqlite3.dll Sqlite3

Anther variation is to to put tclsqlite.dll in c:\tcl\lib\sqlite and create a pkgIndex.tcl file that says something like:

package ifneeded sqlite 2.0 [list load [file join $dir \

tclsqlite[info sharedlibextension]]]Then, package require sqlite in the script.

Or, for SQLite 3:

package ifneeded sqlite3 3.0 [list load [file join $dir \

tclsqlite3[info sharedlibextension]] tclsqlite3]Then, package require sqlite3 in the script.

There is also a simpler way. The tclsqlite.dll file can be in a directory totally unrelated to Tcl. The directory only needs to be in the $PATH. Then just load tclsqlite.dll and it works (LES).

Usage examples

The best place to start is The Tcl interface to the SQLite library .

SQLite provides some runtime introspection. For example, given a database, unknown.db:

# sqlite unknown.db ... sqlite> .help ... sqlite> .tables ... sqlite> .schema sqlite_master ... sqlite> select * from sqlite_master; ...

and so on. Notice that sqlite_master here is a keyword that names the table that holds the schema for unknown.db.

kostix: SQLite creates its command for the opened database in the global namespace, i.e.:

% namespace eval ::foo {

sqlite3 db mydbfile

}

% info comm ::foo::*

%

% info comm ::db*

% ::db

o you must fully qualify the first argument to '''sqlite''' command if you want the database handling command to be created in a different namespace, like this:

% namespace eval ::foo {

sqlite3 [namespace current]::db mydbfile

}

% info comm ::foo::*

% ::foo::db( Side note: the hexadecimal number that returns from the sqlite3 command is the value of the sqlite3 pointer. It is used for testing and will likely be replaced by something less kludgy in the future. So don't use it.)

Bind Parameters

In the Tcl interface to SQLite 3.0, in an SQL statement, an expression or literal value that begins with $, :, or @, is a bind parameter (or just parameter), and the value of the corresponding Tcl variable is used in its place:

db eval {INSERT INTO table1 VALUES($name,$vx)}or

db eval {INSERT INTO table1 VALUES(:name,:vx)}In the previous examples, SQLite replaces the parameters with the values of the corresponding Tcl variables.

Gotcha: If a corresponding Tcl variable doesn't exist for some bind parameter, the parameter takes on the value of NULL. This can result in unexpected behaviour in a program. In the following example, the author may have inadvertantly failed to create a variable name role prior to executing the query:

proc doquery {} {

set name Paul

set record [db eval {

select * from actors where name == :name and role == :role}]

}The bind_fallback method can be used to produce an error intead, or to handle the situation in some other way. See the documentation.

Bind parameters provide an important safeguard against injection attackts. If the command was written such that Tcl did the variable substitution, the program would be vulnerable:

#warning! exploitable code ahead! db eval "INSERT INTO table1 VALUES($name,$vx)"

$name or $vx could be constructed in such a way that SQLite would interpret their values as part of the SQL statement, and execute it.

A parameter can be used to access Tcl array variable:

db eval {insert into table1 values ($data(one))}However, another parameter may not be used as the index part of the variable name:

set item one

set data(one) {a number}

# This syntax is not supported

db eval {insert into table1 values ($data($item))}A bind parameter can only be used where a literal value or an expression is allowed. Table names or field names are not literal values or expressions, so a bind parameter may not be used in their stead. The following is wrong:

set table project

set field projectName

# $table and $field occur at points where a bind parameter is not allowed

db eval {SELECT * FROM $table WHERE $field LIKE $searchterm)When a table or field name is held in a Tcl variable, the way to use such a value is by letting Tcl do the substitution, but in that case the program should first make sure that the value is a valid table or field name:

db eval [format {SELECT * FROM %s WHERE %s LIKE $searchterm} $table $field]Another way to write the same command would be:

db eval "SELECT * FROM $table WHERE $field LIKE :searchterm"

In the following example, whitespace separates $table and (a,b,c), so that it doesn't look like an array variable to Tcl:

db eval "INSERT INTO $table (a,b,c) VALUES (:tclVarA, :tclVarB, :tclVarC)"

Another way to do it would be:

db eval "INSERT INTO ${table}(a,b,c) VALUES (:tclVarA, :tclVarB, :tclVarC)"Variables Created by Eval

The eval command of the Tcl interface to SQLite optionally takes a Tcl script as an argument, in which case a variable is created for each result column:

db eval {select id from table1} {puts $id}The following command has the potential to create unexpected/undesired variables:

db eval {select * from table1} {}To avoid name conflicts the previous example might cause, tell eval the name of an array variable to populate instead:

db eval {select * from table1} record {parray record}One variable in the array, *, is a list of the names of result columns from the query:

db eval {select * from table1} record {

puts [list {the columns in table1 are} $record(*)]

break

}When creating a variable, The Tcl interface to SQLite uses just a simple column name, not any table name that may prefix it. In the following statement, $name contains either the value of s.name or f.name, and there's no way to determine which it contains:

db eval {select s.name, f.parent, f.name

from files f, shares s

where s.name = f.share

...

} {

puts $name

}To avoid this problem, assign a unique column alias to each ambiguous column name:

db eval {

select s.name as sname, f.parent as fparent, f.name as fname

...

} {

puts [list $sname $fparent, $fname]

}

SQLite functions

TR MJ: Apart from the built-in functions in SQLite, you can make your own. SQLite functions can give you capabilities similar to for example Oracle's PL/SQL. So how difficult is it to add regexp support? Easy. Just do:

$dbhandle function regexp regexp

and then you have it! Usage like this:

$dbhandle eval {select column1,column2 from table where column2 regexp '^something\s.*'}See SQL As Understood by SQLite for reference.

Note, that the Tcl command given with $dbhandle function ... can only be a single word, not a script with several statements. E.g., if you want to do something with the arguments before handing them over to the Tcl command, it must be done like this:

sqlite db {}

# Tcl proc we want to use (assuming its implementation cannot be changed for whatever reason), it takes 3 single arguments:

proc tclcalc {a b c} {expr {$a+2*$b + 3*$c}}

# we need a wrapper in order to call it from sqlite:

proc tclcalcwrapper {args} {tclcalc {*}$args}

# now, the args get processed correctly for the db function to work:

db function calc tclcalcwrapper

# use the function:

db eval "select calc(1,2,3)"Here is another simple example, that adds a function to double a number:

package require sqlite3

sqlite3 db {}

# create a Tcl procedure, that will get called when the db function is invoked

proc double {num} {

return [expr {$num*2}]

}

# register this procedure to the SQL engine

db function double double

# create a test table and fill it with some data

db eval {create table test(i integer, i2 integer)}

for {set i 0} {$i < 100} {incr i} {

db eval {insert into test values($i,NULL)}

}

# show the records

db eval {SELECT * from test} {

puts "$i: $i2"

}

# update the values using the registered procedure

# this is a contrived example, normally you would just use i2=2*i

db eval {UPDATE test SET i2=double(i) WHERE i2 isnull}

db eval {SELECT * from test} {

puts "$i: $i2"

}AMG: I prefer:

$dbhandle function regexp -argcount 2 -deterministic {regexp --}This allows the regular expression to begin with a minus sign, and it can have better performance (due to -deterministic) and better diagnostics (due to -argcount 2).

Useful code snippets

RZ: read/write binary data

package req sqlite3

sqlite3 db :memory:

db eval {create table img(b blob)}

# save data

set fd [open test.png r]

fconfigure $fd -translation binary

set c1 [read $fd]

close $fd

db eval {insert into img values(@c1)}

set r [db last_insert_rowid]

# read data

set fd [db incrblob img b $r]

fconfigure $fd -translation binary

set c2 [read $fd]

close $fdUS: This recipe is for a hot backup of a sqlite database file (confirmed by drh on the sqlite mailing list) and translated to a short Tcl code snippet:

# Connect to the database

sqlite3 db $dbfile

# Lock the database, copy and commit or rollback

if {[catch {db transaction immediate {file copy $dbfile ${dbfile}.bak}} res]} {

puts "Backup failed: $res"

} else {

puts "Backup succeeded"

}JOB: The above code works, but raises an error if the backup file already exists, which means one can run the command exactly once. Therfore I would rather suggest to change the statement to:

if {[catch {db3 transaction immediate {file copy -force -- $dbfile ${dbfile}.bak}} res]} {But wait, nowadays we can use the backup API, which is the prefered method - the database does not get locked while running the backup:

if {[catch {db3 backup $backupfile} res]} {AMG: How does this compare to database backup ?

Googie 2011-10-25: I believe both ways realize pretty much the same thing. They both keep write-lock during operation, but other connections can read the database. The difference is when you do a backup of in-memory database (a dump of "temp" database to file), or backup of ATTACHed database - these are supported by database backup, but not by simple file copying.

sisusimple 2011-10-26: The above backup code snippet was developed before the sqlite backup interface/api was developed. Both can still be used. The advantage of the backup interface is that the source db needs not to be locked for the complete time of the backup. See also Using the SQLite Online Backup API .

Here is a work-around to abort a sqlite-operation:

sqlite3 db $dbFileName

# process events during query

db progress 100 {update}

# this forces a "callback requested query abort"-error to be generated if button is pressed while query runs

button .cancelBtn -text "cancel query" -command {db progress 100 {set notExistingVar 1}}

pack .cancelBtn

# start the query

db eval $veryLongRunningQueryIf you catch the "callback ..."-error you can return an empty string or handle it in another way. Don't forget to call

db progress 100 {update}once more, after aborting, otherwise all later queries will abort as well.

The above is even easier using the new "interrupt" method to the SQLite database object. See http://www.sqlite.org/cvstrac/tktview?tn=1889 for additional information.

JOB: Days between dates code snipped. How many days have been passed by, since ... ? Type the following:

SELECT julianday ( current_date ) - julianday( DATE('2011-09-29'));Note: In sqlite is not necessary to specify "select ... from dual".

AMG: Here's a Tcl 8.6 proc to run and pretty-print a query with everything aligned nicely:

proc query {db query} {

$db eval $query out {

if {![info exists widths]} {

lappend grid $out(*)

set widths [lmap column $out(*) {string length $column}]

}

lappend grid [set row [lmap column $out(*) {set out($column)}]]

set widths [lmap width $widths value $row {expr {

max($width, [string length $value])

}}]

}

set format %-[join $widths "s %-"]s

foreach row $grid {

lappend display [format $format {*}$row]

}

join $display \n

}Tcl variables as value-lists

AMG: Feature request! I'd like to use lists stored in Tcl variables as SQL value-lists. Of course there needs to be a way to differentiate between the Tcl variable expanding to a single value versus a list of values, so I will borrow {*}, but by no means am I married to this particular notation! Here are some examples of what I'd like to do:

# Define data.

db eval {create table rolodex (name, number)}

set insert {{{Andy Goth} 222-333-5555}

{{Chris Goth} 444-777-5555}

{{Dick Goth} 999-888-5555}}

set delete {{Andy Goth} {Chris Goth}}

# Insert some rows.

foreach entry $insert {

db eval {insert into rolodex values({*}$entry)}

}

# Delete some rows.

db eval {delete from rolodex where name in ({*}$delete)}Using current SQLite, the above is coded:

# Insert some rows.

foreach entry $insert {

lassign $entry name number

db eval {insert into rolodex values($name, $number)}

}

# Delete some rows.

foreach name $delete {

db eval {delete from rolodex where name = $name}

}which requires more local variables, more effort on Tcl's part, and more SQLite invocations.

I'm not sure if it's necessary to support combinations like the following:

# More data.

set delete2 {Keith Vetter}

set delete3 {{Larry Virden} {Richard Suchenwirth}}

# Delete lots of rows. (Pretend I inserted them earlier.)

db eval {delete from rolodex where name in ({*}$delete, $delete2, {*}$delete3, "Jean-Claude Wippler")}AMG, update:

- After thinking about it some more, I have decided that it's best not to support these arbitrary expansion combinations. This would needlessly bloat SQLite, as it is reasonable to use Tcl scripting to assemble the list.

- If it's not legal to mix expansion-to-multiple-values with anything else, then it's needlessly* verbose to say ({*}$var). Instead just say $var, without parentheses.

* Today's Wiki edit is brought to you by the word "needlessly" and the number pi!

Here's an example showing (1) and (2) above:

set victims [list {*}$delete $delete2 {*}$delete3 {Jean-Claude Wippler}]

db eval {delete from rolodex where name in $victims}See, no parentheses. If the query had instead been written {delete from rolodex where name in ($victims)}, it likely would have had no effect, as it would be equivalent to {delete from rolodex where name = $victims}.

I can only find two places where SQLite supports value-lists:

- The "IN" and "NOT IN" expressions.

- The "VALUES" clause of "INSERT" and its alias "REPLACE".

And both places I think would greatly benefit from the ability to expand a Tcl variable to an entire value-list rather than a single value.

ZB 2008-09-30: "After thinking about it some more" I'm pretty sure, that ability to use "value list" during INSERT would be very helpful. Currently one has to "manually" change appropriate place in script, when the amount of values has been changed (how can you dynamically resize "values($name, $number)" in your "Insert some rows" example?).

So, it's not necessary to describe every new - really useful - feature as "bloat".

AMG: It is bloat because it duplicates existing functionality. Tcl excels at list processing, so why not let Tcl do its job? I'm not saying that I have second thoughts about the whole feature request, only about the support for {*}. Instead, all I ask is for the ability to supply an entire value list from a single Tcl object, rather than being required to supply each element of the list from a separate Tcl object. The syntax I propose is to drop the parentheses when the entire value list is to be taken from a single Tcl object.

AMG: I noticed that Dossy requested a feature very similar (possibly identical) to my value-list proposal. Search for [email protected] on the sqlite - Unsupported Sql page.

Performance Benefit of Explicit Transactions

AMG: Today I spent some time learning about how SQLite guarantees atomicity in the face of software, filesystem, and power failures. It occurred to me that it takes an awful lot of work to do it right. Next I realized that all this setup and tear-down work has to be done for each transaction, not for each statement.

This explains why a simple database generation utility I wrote is so slow, even though it does nothing more than build a database from scratch to store precomputed data. (It's automatically generated C code, by the way.) It doesn't explicitly use transactions, so each statement is implicitly a separate transaction. After learning about the overhead involved in each transaction, I decided to add BEGIN and COMMIT to my utility. Here's the result:

| Database generation time without BEGIN/COMMIT | 7.347 seconds |

| Database generation time with BEGIN/COMMIT | 0.070 seconds |

Yup, it's now 100 times faster!

The moral of the story: Always use explicit transactions when executing more than one statement at a time, even if your specific application would not benefit from atomicity.

Scott Beasley: Transactions are a good rule for all multi-sql runs for most any dB, not just Sqlite. I bulk load 2-6million blobs a night and Transactions are the only way to get it done in a reasonable amount of time. Another performance helper is to make the journal file persistent, for long running insert/update jobs.

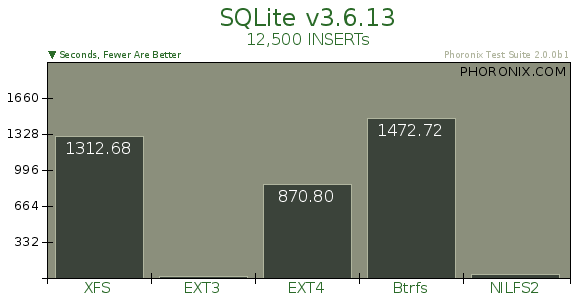

Performance differences on various filesystems

2009-06-29: According to some tests (http://www.phoronix.com/scan.php?page=article&item=ext4_btrfs_nilfs2&num=2 ) there are shocking differences on various filesystems, which should be taken into consideration:

Precompiled tcl-sqlite

AMG: Where are the precompiled binaries for tcl-sqlite? They used to be available on the download page [L2 ], but they're not there now. Anyway, I just compiled an x86 Windows binary of tcl-sqlite 3.7.4; it can be found here: [L3 ]. Here's how you load it:

load sqlite374.dll Sqlite3

DDG: A starkit for version 3.7.4 containing builds for Linux (x86 and x86_64), Micorosft Windows (x86) and OS-X Darwin (ppc and x86). Also additional math, string and aggregate functions are available: Math: acos, asin, atan, atn2, atan2, acosh, asinh, atanh, difference, degrees, radians, cos, sin, tan, cot, cosh, sinh, tanh, coth, exp, log, log10, power, sign, sqrt, square, ceil, floor, pi. String: replicate, charindex, leftstr, rightstr, ltrim, rtrim, trim, replace, reverse, proper, padl, padr, padc, strfilter. Aggregate: stdev, variance, mode, median, lower_quartile, upper_quartile. [L4 ]

I updated the starkit to version 3.7.10 and added as well a few more sqlite functions md5, md5_crypt, format (like tcl's format command), cflormat (clock format), uuid (from the tcllib package uuid). The download page is: https://bitbucket.org/mittelmark/tcl-code/downloads/ the starkit should run on all flavours of OSX- Linux and Win32 from tclkit 8.4, 8.5, 8.6.

A sample session on Windows:

$ /c/ActiveTcl8.4.13/bin/tclkitsh856.exe

% source sqlite-3.7.4.kit

% package require sqlite3

3.7.4

% sqlite3 sql sample.db

{}

% sql eval "create table test (col1,col2)"

% sql eval "insert into test (col1,col2) values (2,3)"

% sql eval "insert into test (col1,col2) values (3,4)"

% sql eval "insert into test (col1,col2) values (10,1)"

% sql eval "select median(col1),stdev(col1),sin(col2),upper_quartile(col2) from test"

3 4.358898943540674 0.8414709848078965 4

% exitColumn names for an empty table

AMG: I can get the names of the columns using the * element of the array produced by $db eval, but what do I do when the table is empty?

Googie: You can use following before evaluating actual select from table:

db eval {PRAGMA table_info('table_name');} row {

# Here use $row(name) as column name

}AMG: Thanks, that works great! I was sure that if anyone knew the answer, it would be you. ;^) Now, it would be Really Cool to have an constraint_info() that can also tell us all the constraints on a table, although this could get really fancy and tricky to design properly. But if done right, maybe you can avoid having to parse SQL in SQLiteStudio.

Googie: It's not that simple subject, believe me. But this is not the place to discuss it.

AMG: table_info doesn't work all that well when the table's in an attached database. In 3.7.7.1, it's a syntax error to prefix the table name with the database name in the argument to table_info. If the table name is unique across all attached databases and the main database, just leave the database part out and you're fine. But if there's a conflict, I see no alternative to creating a separate SQLite database connection object with the database containing the table as its main (and only) database.

Googie 2011-08-08: You still can use table_info() pragma, but you need to do it (the pragma, not its argument) on specific database:

PRAGMA attached_db_name.table_info('table_name');Pragma always works on its local database, including table name that you pass in argument, so you have to execute whole pragma on desired database. Note, that following diagram [L5 ] of PRAGMA syntax represents exactly what I just described.

Troubleshooting

Unable to open database error

Sqlite3 data folder and database must be rw accessible to the web server user. Sqlite3 may also need to create temporary files in the data folder during transactions.

The following works on a Slackware 10.2 install.

- Look in your web server's config files (/etc/apache/httpd.conf) for the default user/group.

- Make both the Sqlite3 folder and database file rw for this user.

- Better yet, move the Sqlite3 database file to its own folder in the htdocs subtree before you adjust the ownership.

Note that the sqlite data file must also be on a real file system. Thus, if your starkit/starpack application needs to use sqlite, the database is going to have to be copied out to a real filesystem before use. If the database also needs to be written, then it is going to be tricky, because I seem to recall that on some (most? all?) systems, the starpack that is running can't be modified...

Bus Error (coredump)

I have this script on a SPARC Solaris 9 system.

package require sqlite3

sqlite3 db1 /tmp/testdb

db1 eval {CREATE TABLE geom1(rect_id int, x0 real, y0 real, x1 real, y1 real)}

db1 eval {INSERT INTO geom1 VALUES(1, 0.0, 0.0, 20.1, 3.1415)}

db1 eval {INSERT INTO geom1 VALUES(2, -10.0, -20.0, 200.123, -0.1234)}

db1 eval {INSERT INTO geom1 VALUES(3, -100.0, -200.0, 300.0, 400.0)}

set x [db1 eval {SELECT * from geom1 ORDER BY rect_id}]

puts $x

db1 closeWhen I attempt to run it with ActiveTcl 8.5.4, I get a Bus Error(coredump) when attempting to perform the CREATE TABLE.

Does anyone know what might cause this sort of problem?

LV: While I don't know what causes this problem, if I download the sqlite3 source code, build and install it on my machine, then use the version I build and install, the script in question runs to completion. When I try either ActiveTcl 8.4.19 or 8.5.4 I get the bus error.

After exchanging emails with ActiveState support staff, they provided a fresh build for activetcl 8.4.19 and sqlite3. With that, the core dump did not occur. If you encounter a similar issue using ActiveTcl, submit a bug report providing details about your platform and the version of ActiveTcl you are using with a small coherent example that demonstrates the problem.

DKF: Also note that this is the wrong place to report bugs in sqlite or ActiveState's build of it since there's no guarantee that anyone who can fix things will watch this page.

LV: Certainly I agree. I was asking a question here first because I thought the problem might have been my usage of sqlite3. After I found more information that confirmed that my usage wasn't wrong, I submitted the bug to ActiveState and worked out a resolution with them.

$db incrblob and asynchronous chan copy

AMG: I can't seem to get asynchronous chan copy to work with $db incrblob. Synchronous works just fine, but when I do asynchronous, the copy never starts. I'm copying from the incrblob to a network socket. (Someday I might also copy from a network socket to the incrblob, but this is doubtful.) I tried an asynchronous copy from the incrblob to a local file, and that hung too. No, I'm not forgetting to enter the event loop. ;^) The copy code I've written works fine when the source channel is an on-disk file, just not when it's an incrblob. Is this an SQLite bug, a design limitation, or a problem in my code?

AMG: At the Eighteenth Annual Tcl/Tk Conference (2011), AK, DRH, and I had a nice discussion concerning this issue, and we identified a potential fix. AK's memchan provides an example of how to support asynchronous chan copy.

SQLite, Web SQL, and IndexedDB

LV: Firefox beta getting new database standard , Stephen Shankland, 2011-01-13, has a brief mention of SQLite as an underlying technology related to Web SQL , and which has now been rejected in favor of IndexedDB .

Minor edit above, and the following, from EMJ.

HTML5 does databases , Pablo M. Castro, 2010-01-04, describes IndexedDB.

For database people, this is basically an ISAM API with Javascript objects as the record format. You can create indexes to speed up lookups or scans in particular orders. Other than that there is no schema (any clonable Javascript object will do) and no query language.

So not a database at all then, more a way for people to write a lot of rubbish software. Another case of people with limited experience enthusiastically solving the wrong problem. (Apologies for rant.)

LV: I was a bit puzzled by the whole thing - unless IndexedDB has multiple implementations, then it is no better than Web SQL. It looks, to me, like a case of NIH in action.

CliC: Neither Microsoft nor Mozilla "invented" it, according to the CNET article, but rather someone from Oracle (maybe formerly from Sun?). They talk about using it to store gobs of data for working offline, a la Google Gears, so I'm guessing they didn't feel need the data guarantees, and did not want the structure, either, of a real SQL database. (They also mention that Google will replace Gears with IndexedDB in some future Chrome release.)

EMJ: Glad you put "invented" in quotes. It doesn't matter who they are, they haven't invented anything. They've just designed an API to tie Javascript to a fairly low-level storage mechanism that is not a new concept at all (reminds me of Btrieve (1987)). And they do have transactions, but without any structure at all it is not a database (BTW, SQL has nothing to do with whether or not something is a database).

Prepared statement caching

AMG: I had always assumed SQLite cached prepared statements inside a custom Tcl_Obj type. However, is actually not the case, at least not in 3.7.7.1. tclsqlite.c instead maintains its own prepared statement cache mapping from the text of the SQL query to the prepared statement object. This design approach has some interesting properties:

- +: Executing an SQL query won't change the internal representation of a Tcl_Obj, so no other special intrep will be lost. (However, I can't imagine an SQL query being anything other than plain text.)

- +: Prepared statement cache lookups aren't hindered by shimmering. As long as the string representation remains consistent, the prepared statement will be found.

- +: The cache will still work even if the statement is dynamically regenerated, if it winds up having the same string representation each time. I guess you could call this immunity to meta-shimmering.

- -: The size of the prepared statement cache is independent of the number of extant Tcl_Objs containing (textual) SQL statements. If the program cycles through executing (at least) n+1 queries when the size of the cache is only n, the queries will get recompiled every time. See [L6 ] for more information on the cache size, which defaults to 10.

- -: Prepared statements are not automatically flushed from the cache when the Tcl_Objs they were created from are deleted. This could be a plus, if the program depends on dynamic regeneration of statements.

Transactions trying to nest themself

Googie 2011-10-30: I have very weird problem in my application. SQLite reports error on executing BEGIN statement, because it says I'm trying to nest transaction (execute BEGIN inside of previous BEGIN). The exact SQLite error message is: "cannot start a transaction within a transaction".

Well, I added debug info to application and here's a trace of BEGIN/COMMIT/ROLLBACK statements executed:

BEGIN [14:20:45.834 26.10.2011] BEGIN succeed [14:20:45.834 26.10.2011] COMMIT [14:20:45.834 26.10.2011] COMMIT succeed [14:20:46.832 26.10.2011] BEGIN [14:21:33.414 26.10.2011] BEGIN failed [14:21:33.414 26.10.2011]

This trace is done with my wrapper around db eval, and here's a trace made with db trace, so - as I believe - there's no way to skip anything, it logs everything that is executed on database connection, so here is is:

SQLite trace: BEGIN TRANSACTION COMMIT TRANSACTION BEGIN TRANSACTION

db errorcode returns 1, so this is "SQL error or missing database".

It cannot be missing database, because this error happens just after other SQL statement is executed on that database - 2 simple code lines earlier. Also the error is always raised by exactly the same "BEGIN" execution, but there are plenty other places with "BEGIN" and only this one causes problem.

SQL error doesn't seem to be an answer as well. I receive bug report like these twice a week. This must be something obvious :(

I run out of ideas. How can it happen?

Note, that I cannot reproduce it. These all are reports from end-users.

EIAS Violations

PYK 2016-02-06: As aspect has noted in the Tcl Chatroom, SQLite 3 commits an EIAS no-no:

sqlite3 db :memory:

set i [expr 1]

set s [string trim { 1 }]

db eval {select $i < $s} ;#-> 1This behaviour can affect operations, for example, when a Tcl variable having a value of 4 and an internal cached numeric interpretation fails in a query to match a string value of 4 in a column with no affinity.

When a column has an explicit affinity, values are cast to that type as needed in an operation, so the most convenient way to deal with the situation is usually just to specify an affinity for each column.

Another way to wrangle the situation is to bake some casting into the statements:

db eval {select cast($i as numeric) < cast($s as numeric)} ;#-> 0Another approach to working with this SQLite inspection of internal cached interpretations of Tcl values is to make sure all values used in SQL queries have no internal cached interpretation. Although SQLite won't implicitly cast as text a Tcl value with a cached numeric interpretation, it will implicitly cast as numeric a value with no internal cached interpretation:

sqlite3 db :memory:

set i [expr 1]

set s [string trim { 1 }]

string length $i

db eval {select $i < $s} ;#-> 0EMJ 2016-03-04: This is explained in Datatype impedance mismatch between Tcl and SQLite - SQLite has its own view of how to deal with data types, and uses it. The comparison is being done in SQLite, not in Tcl, using Tcl variables directly is a convenience feature for input, carrying no expectation that what happens to the SQLite value must exactly reflect what would happen to the Tcl variables. The referenced page does not explicitly say so, but:

package require sqlite3

sqlite3 db :memory:

set i [expr 1]

set s [string trim { 1 }]

db eval {select @i < @s} ;#=> 0PYK 2016-03-04: In the example you gave, @ tells SQLite to interpret the value as a BLOB, and since both operands have the same type, which in this case is BLOB, and have identical values, the result is 0. I'm not sure whether the operator causes SQLite to additionally cast those two values to numeric, but such a cast wouldn't affect the outcome.

I wish that your statement about SQLite carrying "no expectation that what happens to the SQLite value must exactly reflect what would happen to the Tcl variables" were more true! That description would match SQLite better if it didn't inspect the internal cached interpretation of the Tcl value to see how the last Tcl command used it. When it does that, it relies too much on the interpretation of the value by a third party. It seems to me that there's no good reason to do this. Can anyone provide an example of a case where this behaviour is of value?

Here is another example where inspection of the internal interpretation gets in the way. '4' has type TEXT, and $i has an internal representation of numeric. The query returns no matching records:

db eval {create table t (c1); insert into t values ('4')}

incr i 4

db eval {select rowid from t where c1 == :i}If inspection of the internal interpretation were dropped, $i would be interpreted as a string and a record would be returned.

In the example above, '4' has type TEXT, and because c1 has no column affinity, no conversions are performed. If any sort of type at all is declared for c1, the the outcome may be different. Here is an odd change which causes the query to return matching records:

db eval {create table t (c1 Rumplestiltskin); insert into t values ('4')}

incr i 4

db eval {select rowid from t where c1 == :i}In the example above, '4' has type TEXT, but c1 now has a type affinity of NUMERIC, which causes '4' to be converted to a numeric 4 when it is inserted. Rule 5 states that any declared type that doesn't match previous rules causes the type affinity of the column to be NUMERIC.

EMJ 2016-03-06: In Tcl EIAS. In SQLite, the type affinity documentation applies. EI not AS in SQLite. In an interface, there is a mismatch. SQLite tries to determine the type it has been given by the only way available, which is peeking at the representations. Its only other choice would be to treat everything as a string (!). The perception from the SQLite end seems to be that peeking is better more often than not. In particular, it will normally choose BLOB when that is what you mean. I have put "apparent" back in above because SQLite is not Tcl and you can't expect it to obey EIAS. Also the select $i < $s type of example is pointless, when would you ever really do that?

PYK 2016-03-06: Oh, it's a real EIAS violation all right. SQLite may not be Tcl, but the SQLite Tcl interface is a Tcl extension, and when a Tcl command behaves differently depending on the internal cached interpretation of a value passed to it, that's an EIAS violation. Calling it an "unjustified EIAS violation" would be debatable, but calling it an "EIAS violation" isn't.

The select $i < $s example was chosen (not by me) as the most succinct demonstration that the behaviour is real, but there are plenty of ways to get bitten by this behaviour in the real world. In fact, if you've done any non-trivial code using the Tcl SQLite interface, you've probably bumped into it and found your own workarounds.

Inspecting the cached internal interpretation of the Tcl_Obj is not the only option SQLite has, and my suspicion is that it's not even necessary. It's not true that the only other choice would be to treat everything as a string. It could, like expr, prefer a numeric interpretation of the value. It can also take its cues from the other operands which may have a type affinity or even an outright type. Introspecting the type of an SQL value isn't an EIAS violation. When EIAS violations show through, it's usually scenarios where no operand has a declared type affinity or type, and thus no conversions are performed (and internal representations inspected). Since Tcl values aren't typical SQLite values, they may be given a little more latitude than what is described in Datatypes In SQLite Version 3 . Here is a proposal for dealing with Tcl values:

- Absent an explicit cast or parameter annotation, a Tcl value is interpreted in the same way as for expr, and acquires the corresponding SQLite storage class/data type. Futhermore, it is treated as if it was stored in a column with declared affinity of that class/data type.

Given this rule, the following example would return a matching record. The Tcl value would act like a value from a column with a declared INTEGER affinity, causing a conversion of the value in c1 to INTEGER for the purpose of the comparison:

db eval {create table t (c1); insert into t values ('4')}

incr i 4

db eval {select rowid from t where c1 == :i}Even without the incr, the result would be the same:

db eval {create table t (c1); insert into t values ('4')}

set i 4

db eval {select rowid from t where c1 == :i}Considering again the first contrived example, in which no SQLite values are present:

set i [expr 1]

set s [string trim { 1 }]

db eval {select $i < $s} ;#-> 1Both Tcl values would be interpreted as integer values, and the query would yield a result of 0.

This approach would not prevent SQLite from utilizing the cached internal representation of a Tcl value for performance reasons, and would have semantics more similar to expr. So far, I haven't come up with an example where this proposal produces unexpected results.

The Trouble with EIAS Violations: Another Example

PYK 2016-04-04: I recently diagnosed an issue of a query not returning the expected results, and it came down to this: First, a query produces a value:

set id [db eval {select max(id) + 1 from mytable}]Second, $id is used to INSERT a record into mytable. Third, a query like the following produces no rows:

set id [expr $id]

db eval {select * from mytable where id == $id}Why not? Because db eval produces a list, so for the subsequent INSERT operation, SQLite doesn't have the benefit discovering a numeric type, and just decides to enter the value into the table as a string, even though the declared type of the column is INTEGER. later, when a numeric $id is used to query the table, there's no match.

One way around the problem is to extract the value from the list in the first place:

set id [lindex [db eval {select max(id) + 1 from mytable}] 0]Now, $id is carrying a cached numeric representation, which SQLite capitalizes on.

One possible fix is for SQLite to introspect even further, and if the value is a list containing one item, to cue off the the cached internal representation of that item, but I think such a fix goes down the wrong path. Instead, the scheme for interpreting incoming Tcl values could be revamped, something along the lines of what I've described earlier on this page, to take its direction more strongly from information provided on the SQLite side, and forget about cached representations of incoming values. The value introspection was a tricky hack, but causes more trouble than it's worth.

aspect: Not that it reduces or mitigates the EIAS violation, but your example has a latent bug: db eval is clearly documented as returning a list. When a query returns a single value, db onecolumn should be used.

In the case of a list containing a single number, we currently have list $x eq $x making the example "safe", but that's teetering awfully close to the kind of representation-sloppiness that leads people to string map braces out of lists. At least, it will look odd next to code that has to get a single string out of a table.

Aside to that, db onecolumn is a weird name. I prefer to alias it db scalar.

PYK 2016-05-05: Yes, even though the "this list is always going to be a number, so I'll just use it as one" hack is a perfectly acceptable thing to do in Tcl scriptland, it's also true in general that treating a list as just a string can lead to trouble if one isn't absolutely clear about the operations and values involved. There are plenty of dragons around already without the additional complications of EIAS violations.

EIAS Violation: ByteArray with and without a String Representation

PYK 2020-06-14:

If a value has an internal ByteArray representation but no string representation, SQLite makes the byte sequence in the ByteArray a blob. If the string representation of the Tcl value happens to get generated at some point, the value no longer compares as equal to itself in an SQL statement because SQLite makes a text value from the modified utf-8 representation of the Tcl value rather than making a blob from the ByteArray:

#! /usr/bin/env tclsh

package require sqlite3

sqlite3 db :memory:

db eval {create table t1 (value)}

variable data1 bb

variable data1b [binary format H* $data1]

db eval {

insert into t1 values ($data1b)

}

# this generates a string representation for $data1b

encoding convertto utf-8 $data1b

# now the Tcl value no longer compares as equal to itself

set data2b [db onecolumn {

select value from t1 where t1.value = $data1b

}]

puts [llength $data2b] ;# -> 0SQLite could avoid this situation by encoding a blob value into the utf encoding of the database in order to compare it with a text value. The idea behind the current behaviour may be to make comparison more performant in case the programmer is keeping careful track of blob data represenations, but the current behaviour is not general enough, and it's more trouble than it's worth.

Ideally SQLite would take a cue from Tcl, make "BLOB" an alias for "TEXT", and keep any details of storing binary data more efficiently out of public sight.

additional discussions:

- Tcl bytearray woes , pyk, 2022-02-08

- set v "" = bytearray object? , 2005-06-21

SQLite EIAS Violation Meets Tcl's byte compiler

PYK 2019-09-02:

In the following example, $var3 has no internal representation, and the "c" column has no type affinity, so SQLite doesn't convert $var3 to a numeric type. Therefore, the query returns no results the first time because $var3, when taken as a text value, doesn't match the numeric 3 stored in "c".

The second time, the query does return results because $var3 now has an "int" internal representation, which matches the numeric 3 stored in the database. Where did this internal representation come from? Tcl's bytecode compiler keeps a table of literal values that it encounters, and identical literal values share the same Tcl_ObJ in that table. expr gives that Tcl_Obj an integer internal representation.

#! /bin/env tclsh

proc p1 {} {

set var 3

set query {

select c, typeof(c) from t where c = $var

}

puts [::tcl::unsupported::representation $var]

set res [db eval $query]

puts [list {query result 2} $res]

p2

puts [::tcl::unsupported::representation $var]

set res [db eval $query]

puts [list {query result 2} $res]

}

proc p2 {} {

expr {[] == 3}

}

package require sqlite3

sqlite3 db :memory:

db eval {

create table t (

c

)

; insert into t values (3)

}

p1jmc 2019-09-05

"works for me"

below is a copy from my wish shell :

(jmcua) 1 % package require sqlite3

3.28.0

(jmcua) 2 % sqlite3 db :memory:

(jmcua) 3 % db eval {CREATE TABLE t (c)}

(jmcua) 4 % db eval {INSERT INTO t ( c )

> VALUES ( 3 )}

(jmcua) 5 % set var 3

3

(jmcua) 6 % # a) var substituted by Tcl :

(jmcua) 7 % db eval " SELECT c, typeof(c)

> FROM t

> WHERE c = $var "

3 integer

(jmcua) 8 % # b) var substituted by internals of SQLite (aka "host parameter")

(jmcua) 9 % db eval {SELECT c

> FROM t

> WHERE c = $var }

3

(jmcua) 10 % string length $var

1

(jmcua) 14 % db eval " SELECT c

> FROM t

> WHERE c = $var "

3

(jmcua) 15 % db eval {SELECT c

> FROM t

> WHERE c = $var }

3-jm

PYK 2019-09-08: jmc, your example doesn't do anything which would give the Tcl_Obj behind $var a numeric internal representation. The issue isn't one of Tcl substitution vs. SQLite substitution, but a more subtle one of script-level artifacts like variables and literals sharing the same Tcl_Obj behind the scenes. The odd behaviour has been reproduced by others. It is sensitive to interpreter state, as the Tcl_Obj involved can be used by the compiler for other literals as well, and therefore might pick up a different internal representation. The naughty behaviour should always occur with Tcl 8.6.9 and no additional startup configuration. Otherwise, use tcl::unsupported::representation to ensure that in the constructed scenario $var obtains an internal integer representation and is substituted by SQLite. The SQLite version is not relevant but the Tcl version may be. It is rather unpredictable when this would happen under various versions of Tcl, but given the current strategy of the bytecode compiler it surely can happen under certain circumstances. My opinion is that the bytcode compiler can afford to give each literal its own Tcl_Obj, and considering issues such as this one, it probably should. SQLite, in turn, should stop using the internal representation to make decisions about the value type.

jmc 2019-09-12: (Tcl/Tk version 8.6.9 from Magicsplat.)

Yes PYK, I agree with you (forgot the importance of proc context of execution in your demo, sorry).

My variation with substitution paths (Tcl or SQLite) was for completeness that I couldn't reproduce your demo.

Just trying to clarify :

- SQLite allows column's type to be undefined. But, if you do that, the door is wide open (IMHO) for type

mismatch in communicating with a host language not strongly typed. (your demo doesn't work if c column of table t is declared of type

INTEGER (and also of type VARCHAR)).

- However, I agree on the core point : if substitution is done by SQLite internals or by Tcl inside a proc, and the context

of SQL query execution can't link the variable to be substituted to a specific column's type declared in the schema,

then type mismatch can occur (never observed this at Tcl level outside a procedure (and adhering strictly to SQL syntax - ie

quotes around tcl variables of type string)).

To be complete, a partial remedy has been recently provided on SQLite' side (release 3.28.0) with function method -returntype option (*)

(whose focus is however limited to tcl proc used in User Defined Function registered in SQLite)

(*) following a discussion of february 2019 a co-worker had with Mr Hipp on SQlite mailing list

jmc 2019-09-13 my above answer corrected with more precision.

PYK 2022-02-06: In Tcl there are no types, so there is no issue of type mismatch, and there is no inherent mismatch problem between Tcl and SQLite. Rather, it's an issue of The Tcl C interface to SQLite not conforming to the rules for interpreting Tcl values. It is proscribed to use anything other than the string value itself to determine the interpretation, except as an internal optimisation. Since the interface does conform to the rules for Tcl extensions, using this interface to work with SQLite can in some cases be problematic. This is a bug which ideally would be corrected, although in practice it may not be due to concerns about existing code which relies on current behaviour. This particular bug can be alleviated by setting appropriate column type affinities, other bugs, such as the ByteArray bug mentioned on this page, cannot.

See also

- set v "" = bytearray object? , comp.lang.tcl, 2005

- D. Richard Hipp, dkf, ms, and kbk discuss the issue.

Sharing a Database Connection Between Tcl and C

AMG: First, create the SQLite database connection in Tcl. Next, pass the name of the created command to C code. Within the C code, call Tcl_GetCommandInfo() on the command name to get its associated Tcl_CmdInfo structure. The objClientData field is a pointer to struct SQLiteDb, whose guaranteed-first member is an sqlite3 pointer ready to be passed to the various SQLite3_* functions. Thus, the type of objClientData may be regarded as sqlite3 **.

There is no way to create the connection in C and then tell Tcl to use that existing connection.

Calling Arbitrary Tcl Commands from SQLite

AMG: The database function method can be used to register specific Tcl commands to be called from SQLite, like so:

proc testFunctionProc {args} {

set len 0

foreach word $args {

incr len [string length $word]

}

return $len

}

db function testFunction -deterministic testFunctionProc

db onecolumn {SELECT testFunction('hello', 'world')}To avoid having to make a named proc, you can use apply:

db function testFunction -deterministic {apply {{args} {

set len 0

foreach word $args {

incr len [string length $word]

}

return $len

}}}

db onecolumn {SELECT testFunction('hello', 'world')}If you want to blow the doors open wide to calling any Tcl command from SQL (thoroughly dangerous when executing untrusted SQL), make a one-size-fits-all "call" function. Its definition is particularly simple:

db function call {}

db onecolumn {SELECT call('string', 'length', call('string', 'cat', 'hello', 'world'))}Combining the above concepts, here's an approach that allows not only calling commands but also arbitrary Tcl scripts passed named parameters:

db function lambda {apply {{params body args} {

tailcall apply [list $params $body] {*}$args

}}}

db onecolumn {SELECT lambda('str', 'string length $str', lambda('args', 'string cat {*}$args', 'hello', 'world'))}

db onecolumn {SELECT lambda('args', 'string length [string cat {*}$args]', 'hello', 'world')}

db onecolumn {SELECT lambda('args', '

set len 0

foreach word $args {

incr len [string length $word]

}

return $len

', 'hello', 'world')}Though once you get fancy, or you use the same script more than once, you're better off creating regular SQL functions. This hack has multiple performance penalties: the Tcl script will have to be compiled each time it gets called (basically each row), and SQLite cannot make any assumptions about its result since -deterministic is not used. The "call" function above avoids the bulk of the cost (needing to compile the Tcl script) since it's a simple command prefix, but it still suffers a bit from not using -deterministic. Of course, that switch can be added, but only if you're sure you will only ever be calling Tcl commands whose results are wholly determined by their arguments.

Real-world example:

db function call {}

clock scan [db onecolumn {SELECT call('regsub', '\..*', timestamp, '') FROM ...}]Here, I'm removing the fractional seconds which sometimes appear in timestamps in my database, which are stored as DATETIME text strings, so that Tcl's clock scan can handle the result. I could also have written:

db onecolumn {SELECT call('clock', 'scan', call('regsub', '\..*', timestamp, '')) FROM ...}But that gets hard to read. Or:

clock scan [regsub {\..*} [db onecolumn {SELECT timestamp FROM ...}] {}]But because my actual "..." is many lines long, it's hard to match up the {} with the regsub. Hence, this compromise seems to be the sweet spot for readability in this one case.

INSERT or UPDATE (UPSERT)

PO 2022/06/07 - The following script tests 3 different ways of upserting a table.

Example timings on my machine are as follows:

> tclsh InsertCheck.tcl 0 0: 64993 rows (21) in 3581 ms > tclsh InsertCheck.tcl 1 1: 64993 rows (21) in 4387 ms > tclsh InsertCheck.tcl 2 2: 64993 rows (21) in 6964 ms

package require sqlite3

# Fastest mode has lowest number.

if { $argc == 0 } {

puts "Usage: $argv0 mode"

puts " mode 0: Use INSERT ON CONFLICT."

puts " mode 1: Check for existence (SELECT). Then INSERT or UPDATE."

puts " mode 2: Catch INSERT statement. If failure, UPDATE."

exit

}

set mode [lindex $argv 0]

set numInserts 500000

set numCommits 10000

set dbName "InsertCheckTestDatabase.db3"

file delete -force $dbName

sqlite3 myDb $dbName

myDb eval {CREATE TABLE "DownloadSites" (

"IP" VARCHAR NOT NULL PRIMARY KEY, \

"Host" VARCHAR, \

"FileCount" INTEGER NOT NULL \

)}

expr srand(0)

set startTime [clock milliseconds]

myDb eval { BEGIN TRANSACTION }

if { $mode == 2 } {

for { set i 1 } { $i < $numInserts } { incr i } {

set ip1 [expr {int (rand()*255)}]

set ip2 [expr {int (rand()*255)}]

set ip "${ip1}.${ip2}.3.4"

set retVal [catch {myDb eval {INSERT INTO "DownloadSites" VALUES( $ip, "host", 1)}}]

if { $retVal != 0 } {

myDb eval {UPDATE "DownloadSites" SET FileCount = FileCount + 1 WHERE IP = $ip}

}

if { $i % $numCommits == 0 } {

myDb eval { COMMIT }

myDb eval { BEGIN TRANSACTION }

}

}

} elseif { $mode == 0 } {

for { set i 1 } { $i < $numInserts } { incr i } {

set ip1 [expr {int (rand()*255)}]

set ip2 [expr {int (rand()*255)}]

set ip "${ip1}.${ip2}.3.4"

myDb eval {INSERT INTO "DownloadSites" VALUES( $ip, "host", 1) ON CONFLICT(IP) DO \

UPDATE SET FileCount = FileCount + 1}

if { $i % $numCommits == 0 } {

myDb eval { COMMIT }

myDb eval { BEGIN TRANSACTION }

}

}

} elseif { $mode == 1 } {

for { set i 1 } { $i < $numInserts } { incr i } {

set ip1 [expr {int (rand()*255)}]

set ip2 [expr {int (rand()*255)}]

set ip "${ip1}.${ip2}.3.4"

set ipHostList [myDb eval {SELECT * FROM "DownloadSites" WHERE IP = $ip}]

if { [llength $ipHostList] == 0 } {

myDb eval {INSERT INTO "DownloadSites" VALUES( $ip, "host", 1)}

} else {

myDb eval {UPDATE "DownloadSites" SET FileCount = FileCount + 1 WHERE IP = $ip}

}

if { $i % $numCommits == 0 } {

myDb eval { COMMIT }

myDb eval { BEGIN TRANSACTION }

}

}

}

myDb eval { COMMIT }

set endTime [clock milliseconds]

set total [expr $endTime - $startTime]

set numRows [myDb eval {SELECT COUNT(*) FROM DownloadSites}]

set maxIns [myDb eval {SELECT MAX(FileCount) FROM DownloadSites}]

puts "$mode: $numRows rows ($maxIns) in $total ms "

myDb close